Mirror of https://github.com/rasbt/LLMs-from-scratch.git (synced 2024-11-25 16:22:50 +08:00)

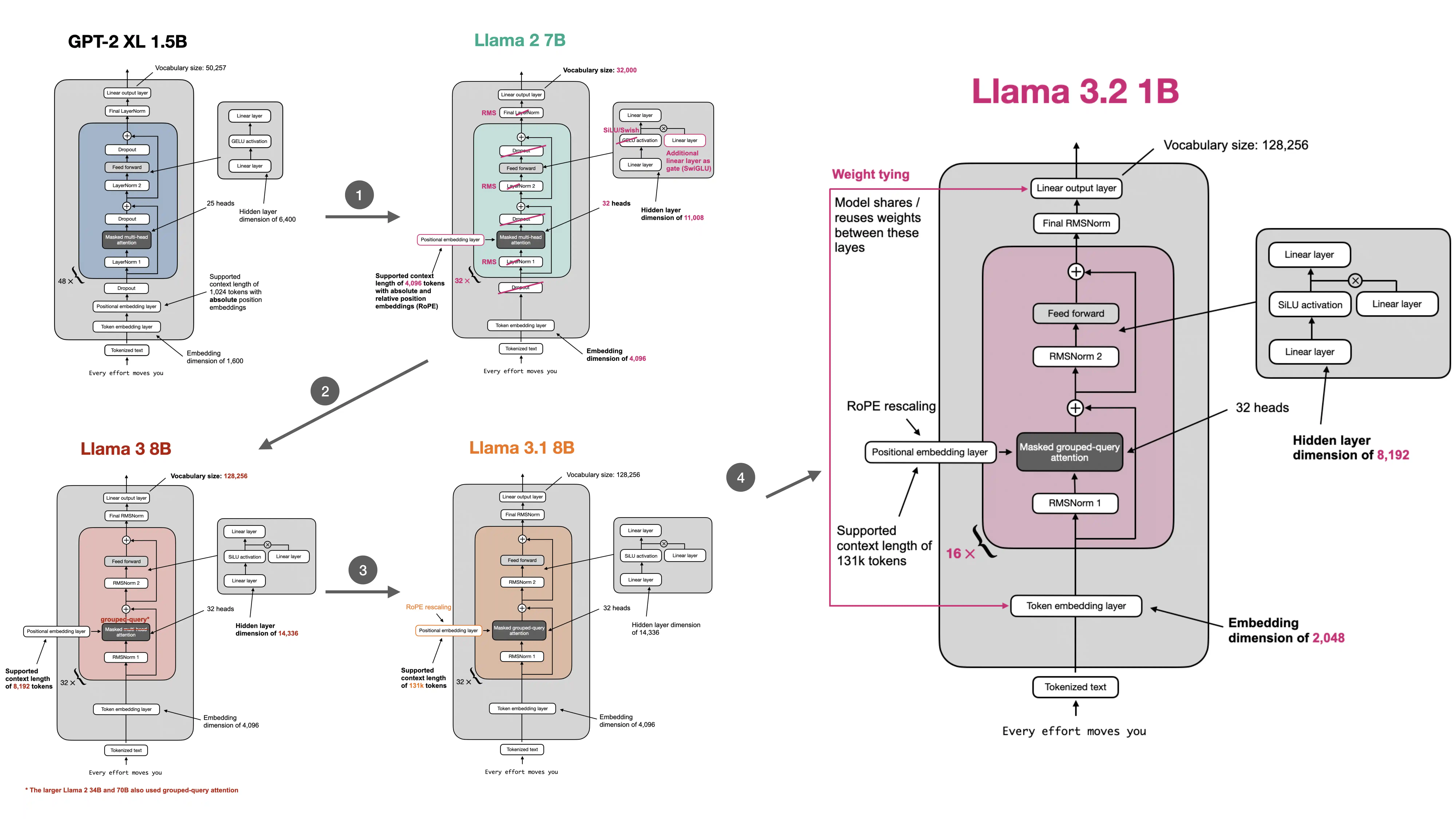

# Converting GPT to Llama

This folder contains code for converting the GPT implementation from chapters 4 and 5 to Meta AI's Llama architecture. The notebooks are listed in the recommended reading order:

- converting-gpt-to-llama2.ipynb: contains code to convert GPT to Llama 2 7B step by step and to load the pretrained weights from Meta AI

- converting-llama2-to-llama3.ipynb: contains code to convert the Llama 2 model to Llama 3, Llama 3.1, and Llama 3.2

- standalone-llama32.ipynb: a standalone notebook implementing Llama 3.2