
Chronos: Learning the Language of Time Series

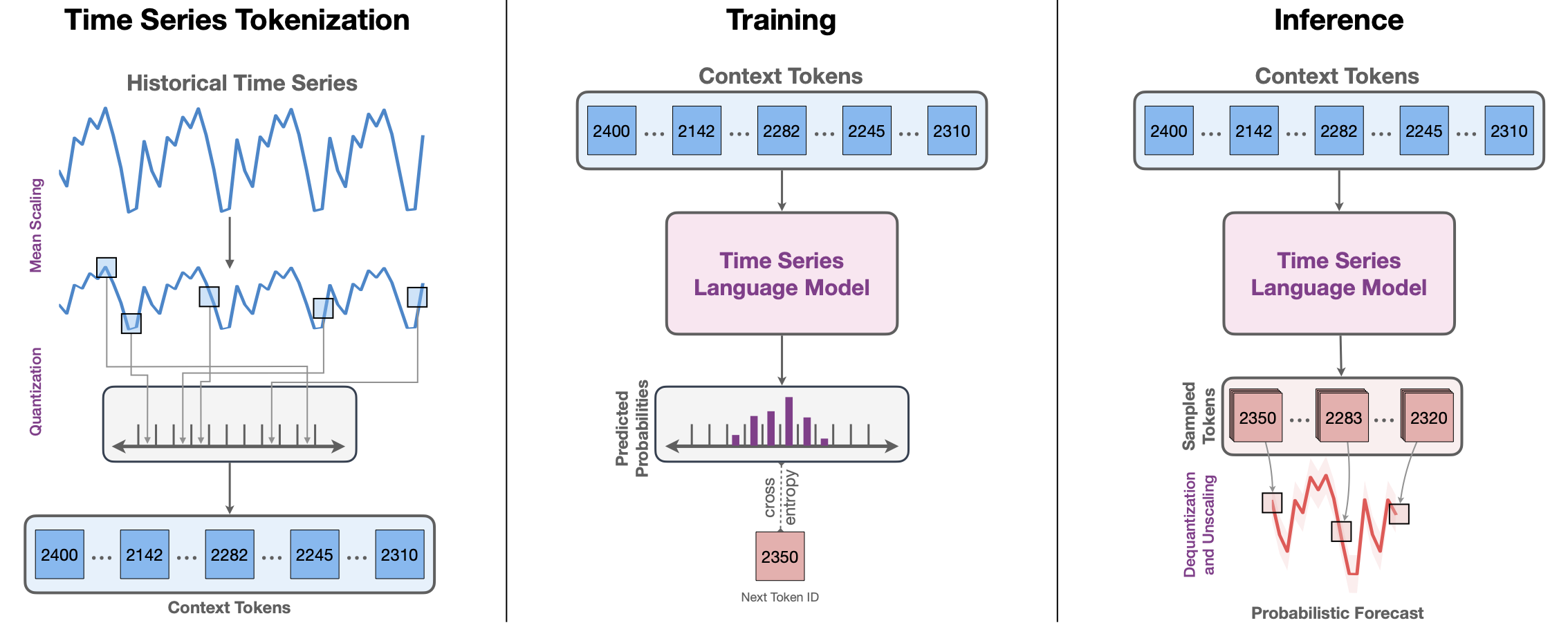

Chronos is a family of pretrained time series forecasting models based on language model architectures. A time series is transformed into a sequence of tokens via scaling and quantization, and a language model is trained on these tokens using the cross-entropy loss. Once trained, probabilistic forecasts are obtained by sampling multiple future trajectories given the historical context. Chronos models have been trained on a large corpus of publicly available time series data, as well as synthetic data generated using Gaussian processes.

For details on Chronos models, training data and procedures, and experimental results, please refer to the paper "Chronos: Learning the Language of Time Series" (arXiv:2403.07815).

Fig. 1: High-level depiction of Chronos. (Left) The input time series is scaled and quantized to obtain a sequence of tokens. (Center) The tokens are fed into a language model which may either be an encoder-decoder or a decoder-only model. The model is trained using the cross-entropy loss. (Right) During inference, we autoregressively sample tokens from the model and map them back to numerical values. Multiple trajectories are sampled to obtain a predictive distribution.
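
The scaling and quantization step described above can be illustrated with a small sketch. This is not the Chronos implementation: the function names `tokenize` and `detokenize`, the bin count of 100, and the bin range of [-15, 15] are assumptions chosen for illustration, and the sample values are made up. The sketch divides the series by its mean absolute value, assigns each scaled value to a uniform bin, and maps bin indices back to numbers through the bin centers.

```python
import numpy as np


def tokenize(series, num_bins=100, low=-15.0, high=15.0):
    """Mean-scale a series and map each value to a uniform bin index.

    num_bins, low and high are illustrative assumptions.
    """
    series = np.asarray(series, dtype=np.float64)
    scale = np.mean(np.abs(series))
    if scale == 0:
        scale = 1.0  # avoid division by zero for an all-zero series
    scaled = series / scale
    edges = np.linspace(low, high, num_bins + 1)
    # Using only the interior edges yields indices in 0..num_bins-1;
    # values outside [low, high] fall into the first or last bin.
    tokens = np.digitize(scaled, edges[1:-1])
    return tokens, scale


def detokenize(tokens, scale, num_bins=100, low=-15.0, high=15.0):
    """Map bin indices back to values via bin centers, then undo the scaling."""
    edges = np.linspace(low, high, num_bins + 1)
    centers = (edges[:-1] + edges[1:]) / 2
    return centers[tokens] * scale


series = np.array([112.0, 118.0, 132.0, 129.0, 121.0])  # made-up example values
tokens, scale = tokenize(series)
restored = detokenize(tokens, scale)
print(tokens)
print(restored)
```

The round trip is lossy: each restored value differs from the original by at most half a bin width, measured in scaled units. A finer grid reduces this error at the cost of a larger vocabulary.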

Architecture

The models in this repository are based on the T5 architecture. The only difference is in the vocabulary size: Chronos-T5 models use 4096 different tokens, compared to the 32128 tokens of the original T5 models, resulting in fewer parameters.

| Model | Parameters | Based on |
|---|---|---|
| chronos-t5-tiny | 8M | t5-efficient-tiny |
| chronos-t5-mini | 20M | t5-efficient-mini |
| chronos-t5-small | 46M | t5-efficient-small |
| chronos-t5-base | 200M | t5-efficient-base |
| chronos-t5-large | 710M | t5-efficient-large |
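
To see why the smaller vocabulary reduces the parameter count, here is a rough back-of-the-envelope sketch. Any matrix with one row per token (such as an input embedding) has `vocab_size * d_model` entries, so shrinking the vocabulary from 32128 to 4096 removes `(32128 - 4096) * d_model` parameters from each such matrix. The helper `params_saved`, the hidden size of 512, and the number of vocabulary-sized matrices are assumptions for illustration, not values taken from this repository.

```python
T5_VOCAB = 32128      # vocabulary size of the original T5 models
CHRONOS_VOCAB = 4096  # vocabulary size of Chronos-T5 models


def params_saved(d_model: int, num_vocab_matrices: int = 1) -> int:
    """Parameters removed from matrices that have one row per token.

    d_model and num_vocab_matrices are assumptions supplied by the caller.
    """
    return (T5_VOCAB - CHRONOS_VOCAB) * d_model * num_vocab_matrices


D_MODEL = 512  # assumed hidden size, for illustration only
saved = params_saved(D_MODEL)
print(f"{saved:,} fewer parameters per vocabulary-sized matrix")
```

If a model keeps separate input and output matrices over the vocabulary, pass `num_vocab_matrices=2` to count both.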

Usage

To perform inference with Chronos models, install this package by running:

    pip install git+https://github.com/amazon-science/chronos-forecasting.git

Forecasting

A minimal example showing how to perform forecasting using Chronos models:

    # for plotting, run: pip install pandas matplotlib
    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    import torch
    from chronos import ChronosPipeline

    pipeline = ChronosPipeline.from_pretrained(
        "amazon/chronos-t5-small",
        device_map="cuda",  # use "cpu" if no GPU is available
        torch_dtype=torch.bfloat16,
    )

    df = pd.read_csv("https://raw.githubusercontent.com/AileenNielsen/TimeSeriesAnalysisWithPython/master/data/AirPassengers.csv")

    # context must be either a 1D tensor, a list of 1D tensors,
    # or a left-padded 2D tensor with batch as the first dimension
    context = torch.tensor(df["#Passengers"])
    prediction_length = 12
    forecast = pipeline.predict(
        context,
        prediction_length,
        num_samples=20,
        temperature=1.0,
        top_k=50,
        top_p=1.0,
    )  # forecast shape: [num_series, num_samples, prediction_length]

    # visualize the forecast
    forecast_index = range(len(df), len(df) + prediction_length)
    low, median, high = np.quantile(forecast[0].numpy(), [0.1, 0.5, 0.9], axis=0)

    plt.figure(figsize=(8, 4))
    plt.plot(df["#Passengers"], color="royalblue", label="historical data")
    plt.plot(forecast_index, median, color="tomato", label="median forecast")
    plt.fill_between(forecast_index, low, high, color="tomato", alpha=0.3, label="80% prediction interval")
    plt.legend()
    plt.grid()
    plt.show()
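
The example above summarizes only the first series (`forecast[0]`). When forecasting several series at once, the same reduction can be applied to the whole batch by taking quantiles over the sample axis. The sketch below uses a synthetic array as a stand-in for the model output, so it runs without downloading a model. The helper name `summarize` and the random data are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
num_series, num_samples, prediction_length = 2, 20, 12

# Synthetic stand-in for a forecast converted to NumPy,
# with shape [num_series, num_samples, prediction_length].
samples = rng.normal(loc=100.0, scale=10.0,
                     size=(num_series, num_samples, prediction_length))


def summarize(samples, levels=(0.1, 0.5, 0.9)):
    """Reduce the sample axis (axis=1) to the requested quantile levels.

    Returns an array of shape [len(levels), num_series, prediction_length].
    """
    return np.quantile(samples, levels, axis=1)


low, median, high = summarize(samples)
print(low.shape, median.shape, high.shape)
```

Here `low[i]` and `high[i]` bound the 80% prediction interval for series `i`, and `median[i]` is its point forecast.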

Extracting Encoder Embeddings

A minimal example showing how to extract encoder embeddings from Chronos models:

    import pandas as pd
    import torch
    from chronos import ChronosPipeline

    pipeline = ChronosPipeline.from_pretrained(
        "amazon/chronos-t5-small",
        device_map="cuda",  # use "cpu" if no GPU is available
        torch_dtype=torch.bfloat16,
    )

    df = pd.read_csv("https://raw.githubusercontent.com/AileenNielsen/TimeSeriesAnalysisWithPython/master/data/AirPassengers.csv")

    # context must be either a 1D tensor, a list of 1D tensors,
    # or a left-padded 2D tensor with batch as the first dimension
    context = torch.tensor(df["#Passengers"])
    embeddings, tokenizer_state = pipeline.embed(context)
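
Both examples note that `context` may be a left-padded 2D tensor. As a minimal sketch of what left padding means, the helper below stacks series of different lengths into one array so that every series ends in the last column and the padding sits at the start of each row. The helper name `left_pad_and_stack` and the use of NaN as the padding value are assumptions for illustration. Check the library for the padding value it expects, or pass a list of 1D tensors to avoid padding by hand.

```python
import numpy as np


def left_pad_and_stack(series_list, pad_value=np.nan):
    """Stack variable-length 1D series into a [batch, max_len] array.

    Shorter series are padded on the left, so the most recent
    observation of every series ends up in the last column.
    pad_value=NaN is an assumption made for this sketch.
    """
    max_len = max(len(s) for s in series_list)
    batch = np.full((len(series_list), max_len), pad_value, dtype=np.float64)
    for i, s in enumerate(series_list):
        batch[i, max_len - len(s):] = np.asarray(s, dtype=np.float64)
    return batch


batch = left_pad_and_stack([[1.0, 2.0, 3.0], [4.0]])
print(batch)
```

The resulting array can be converted with `torch.tensor(batch)` before it is used as a batched context.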

Citation

If you find Chronos models useful for your research, please consider citing the associated paper:

    @article{ansari2024chronos,
      author  = {Ansari, Abdul Fatir and Stella, Lorenzo and Turkmen, Caner and Zhang, Xiyuan and Mercado, Pedro and Shen, Huibin and Shchur, Oleksandr and Rangapuram, Syama Sundar and Pineda Arango, Sebastian and Kapoor, Shubham and Zschiegner, Jasper and Maddix, Danielle C. and Mahoney, Michael W. and Torkkola, Kari and Gordon Wilson, Andrew and Bohlke-Schneider, Michael and Wang, Yuyang},
      title   = {Chronos: Learning the Language of Time Series},
      journal = {arXiv preprint arXiv:2403.07815},
      year    = {2024}
    }

Security

See CONTRIBUTING for more information.

License

This project is licensed under the Apache-2.0 License.